UX Research / Product Strategy / UI

AI Lead Nurture

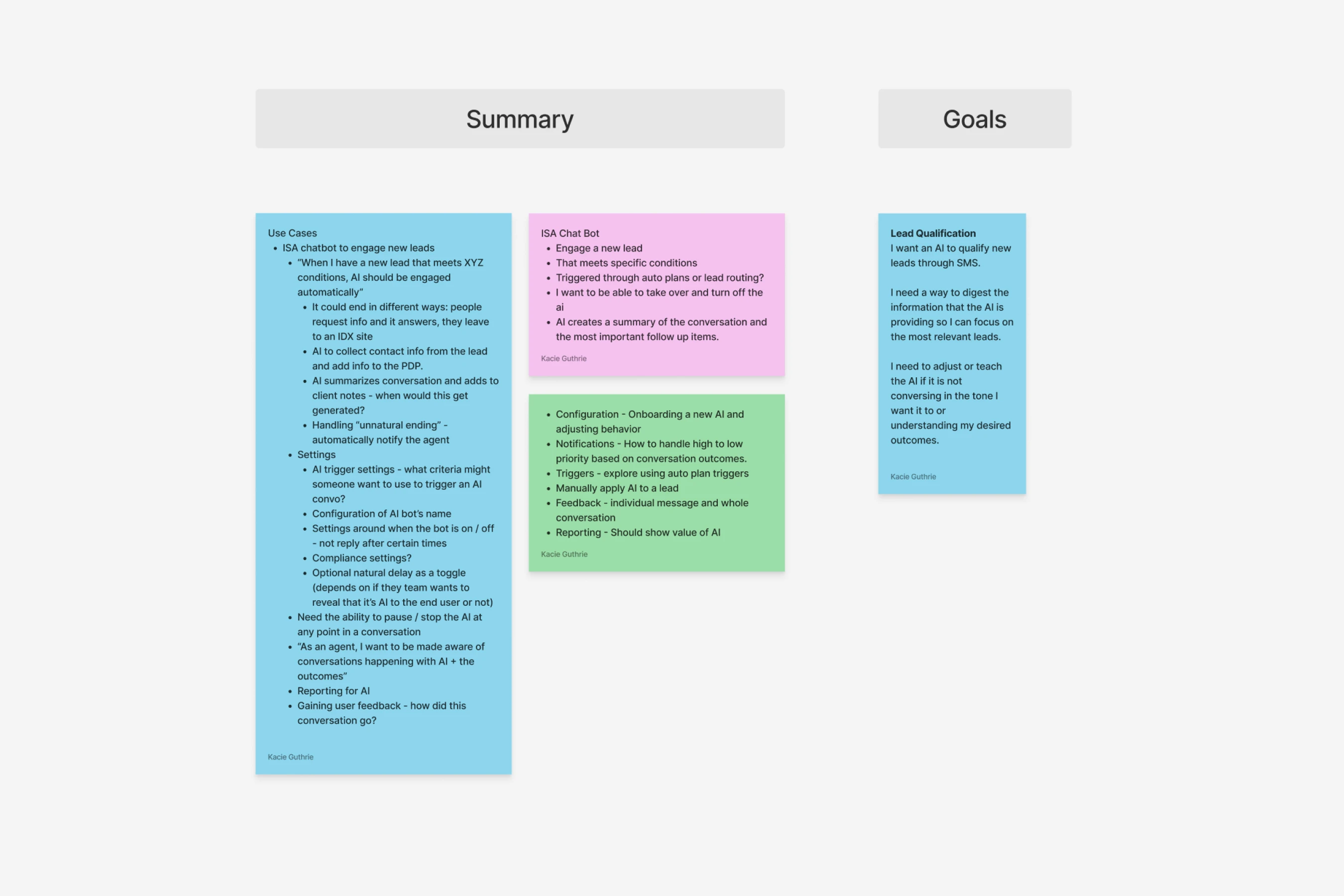

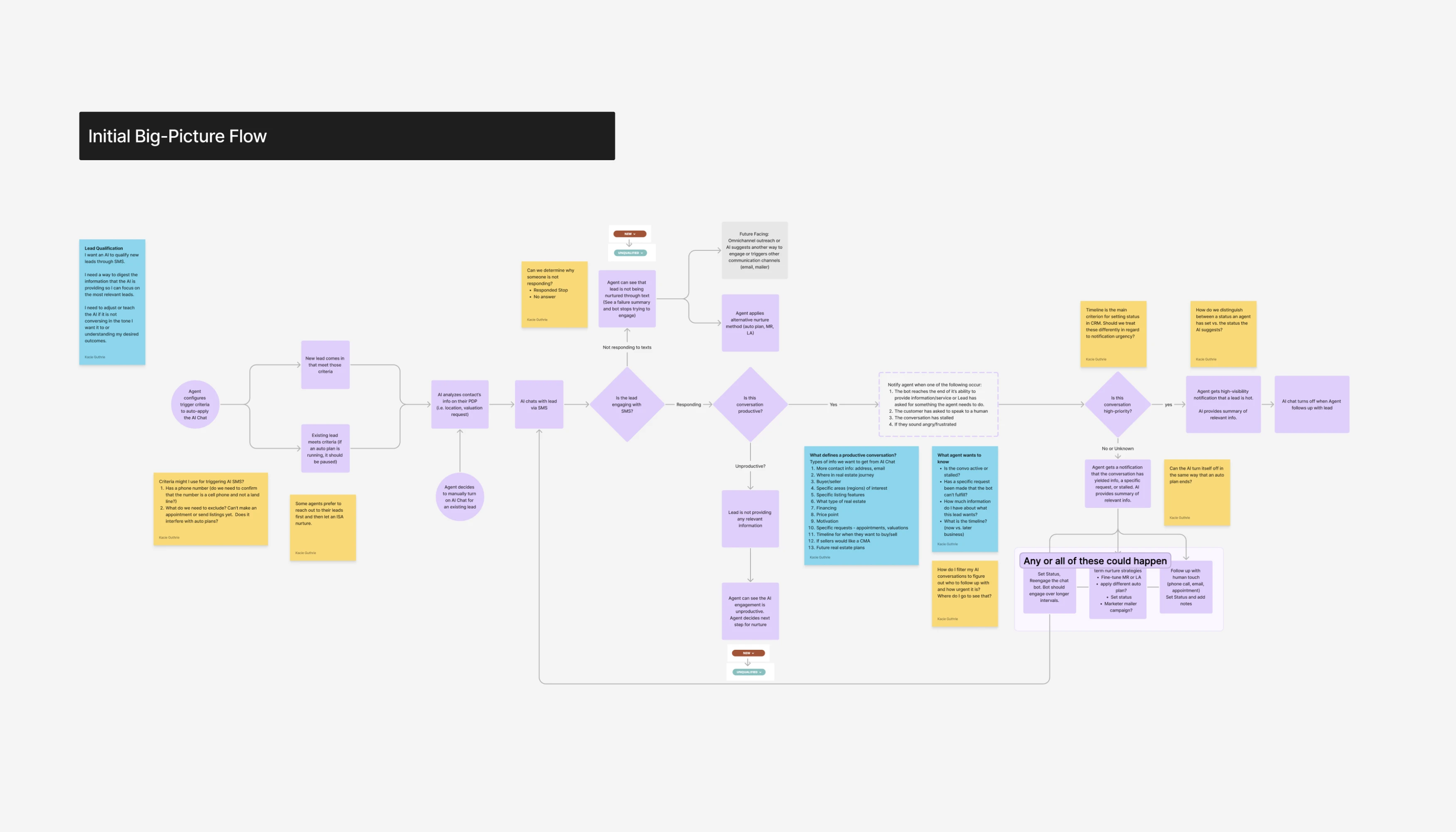

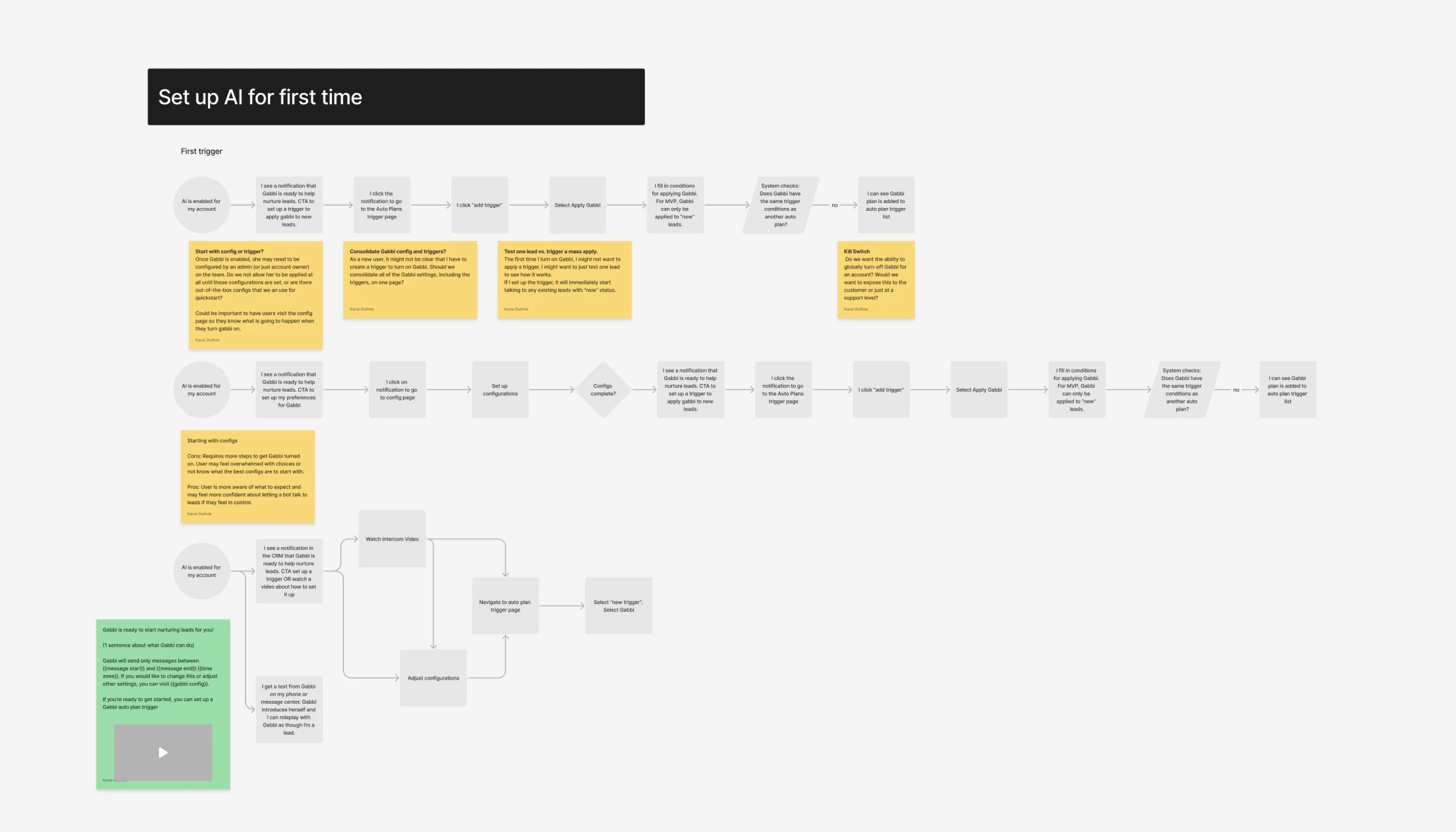

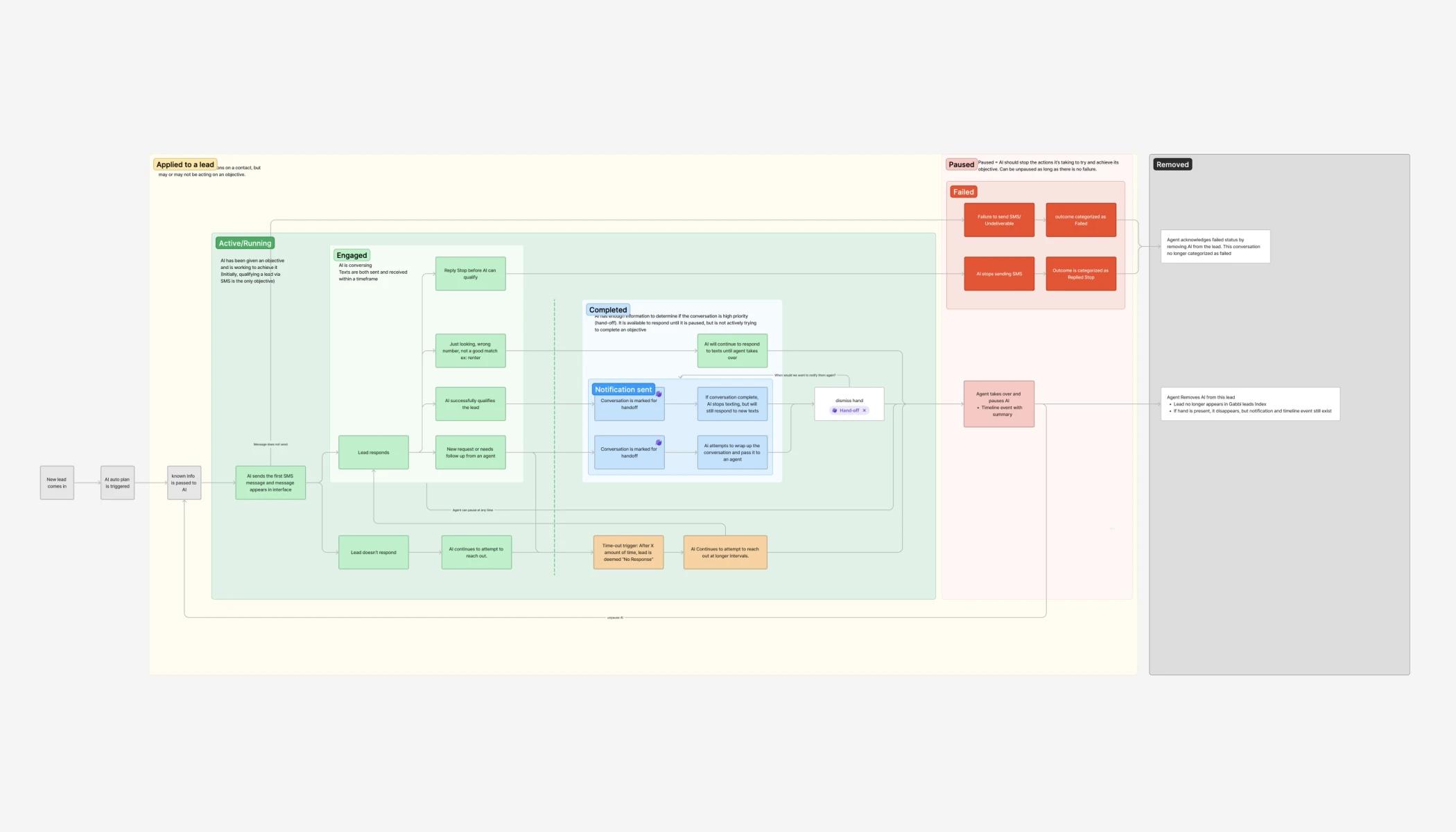

The introduction of AI, and what it promised, revolutionized nearly every industry in 2023, and real estate was no exception. Our stakeholders were quick to imagine how we might use AI in our products. One of our product owners, a leader within the company, built a prototype in his spare time: a very basic chat interface where you could interact with the AI as if you were the lead and it was the real estate agent. The prototype showed that AI could qualify a lead over a text message conversation by asking the primary lead-qualifying questions (LPMAMA), such as where they were looking for homes and at what budget. It was shown at a PLACE Partner conference and to our CEO in early 2023, and it sparked quite a bit of excitement about integrating a lead-qualifying AI-ISA into our CRM. It showed the potential to save agents hours of lead gen time every week. So we were off to the races to define scope and research what the evolution of this feature might be.

Role

UX Researcher

Lead UI Designer

Responsibilities

UX Research

Release Planning (PRDs)

UI Design

Project Length

6 Weeks (off and on)

Starting Out

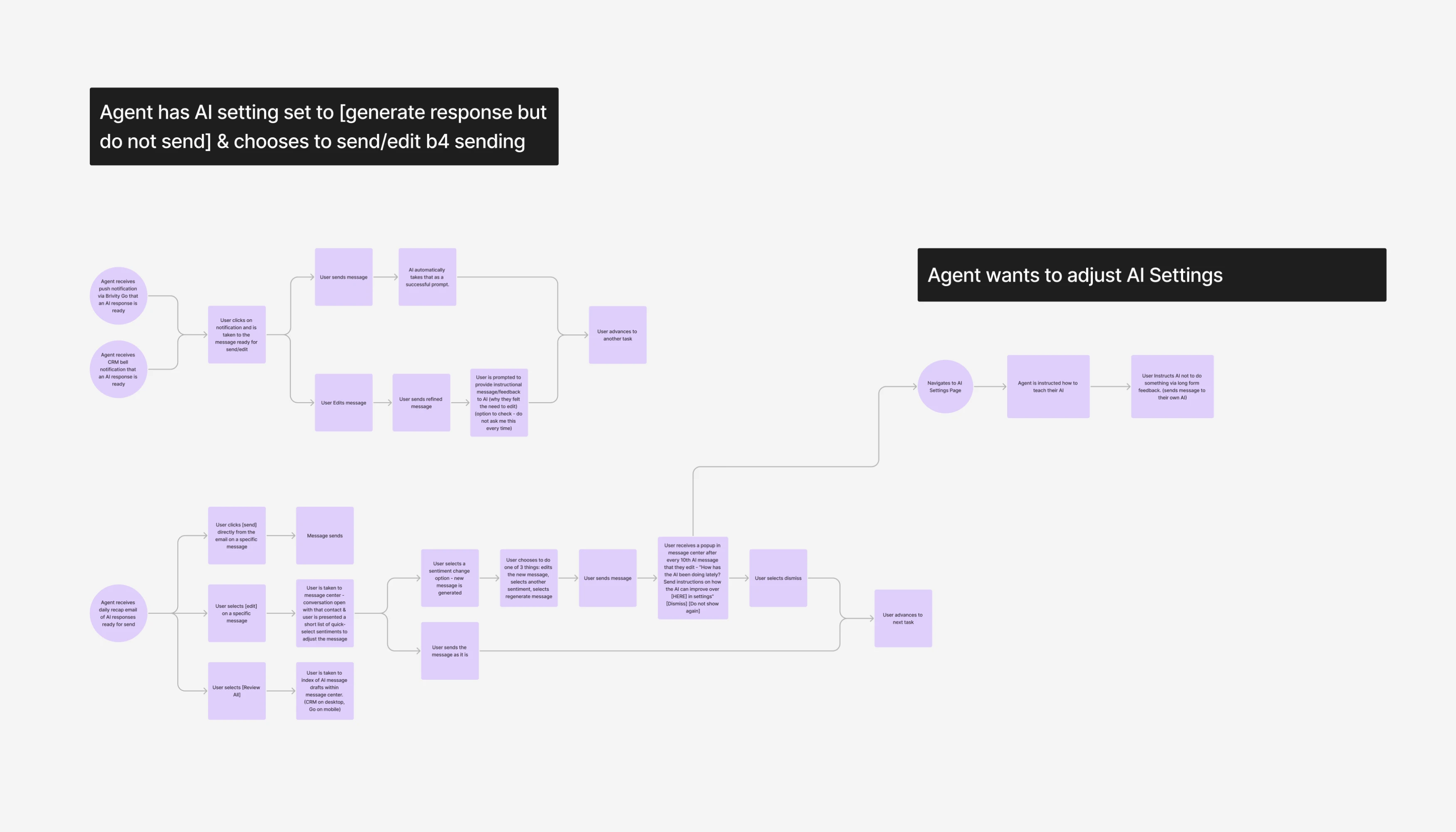

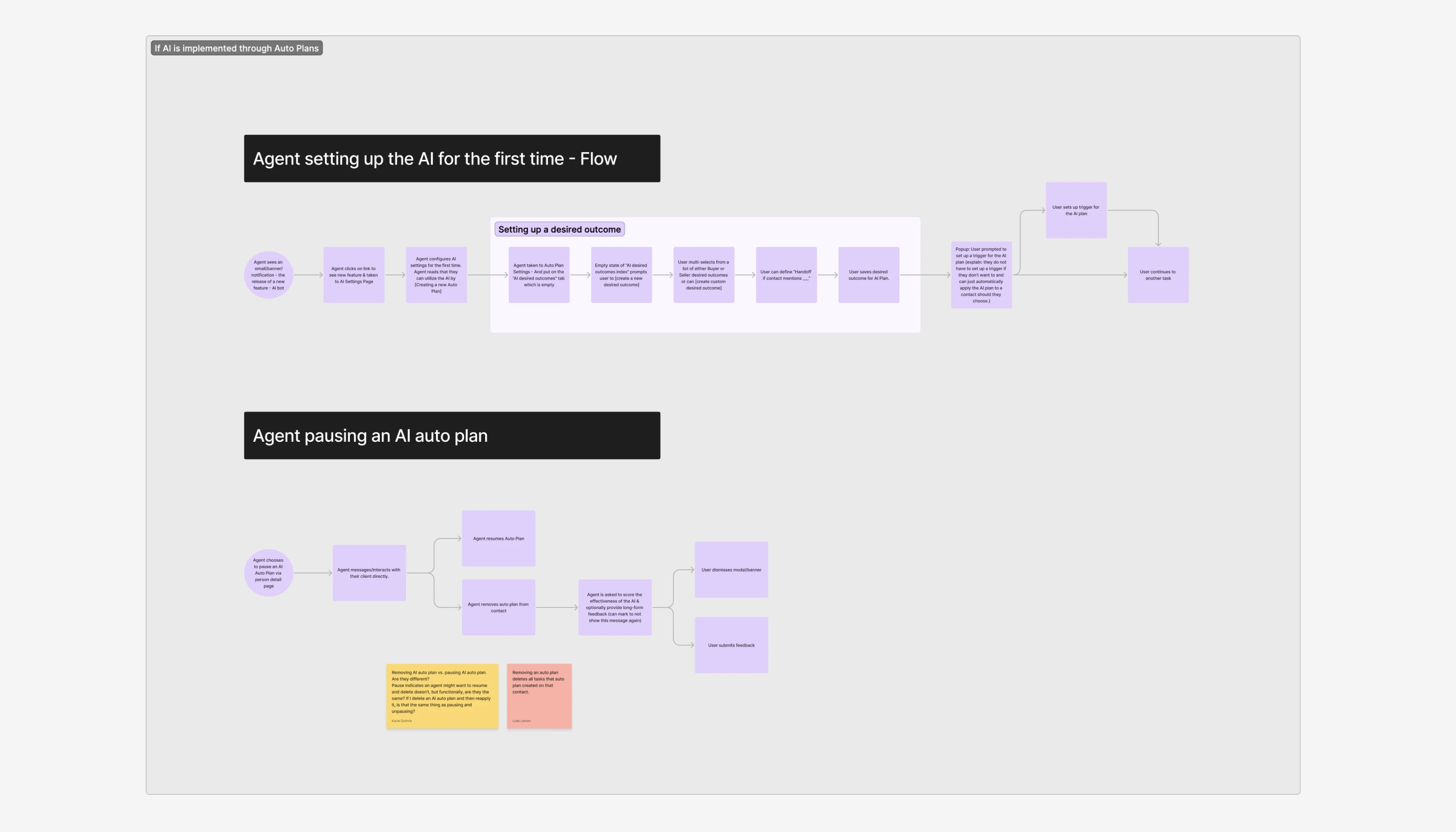

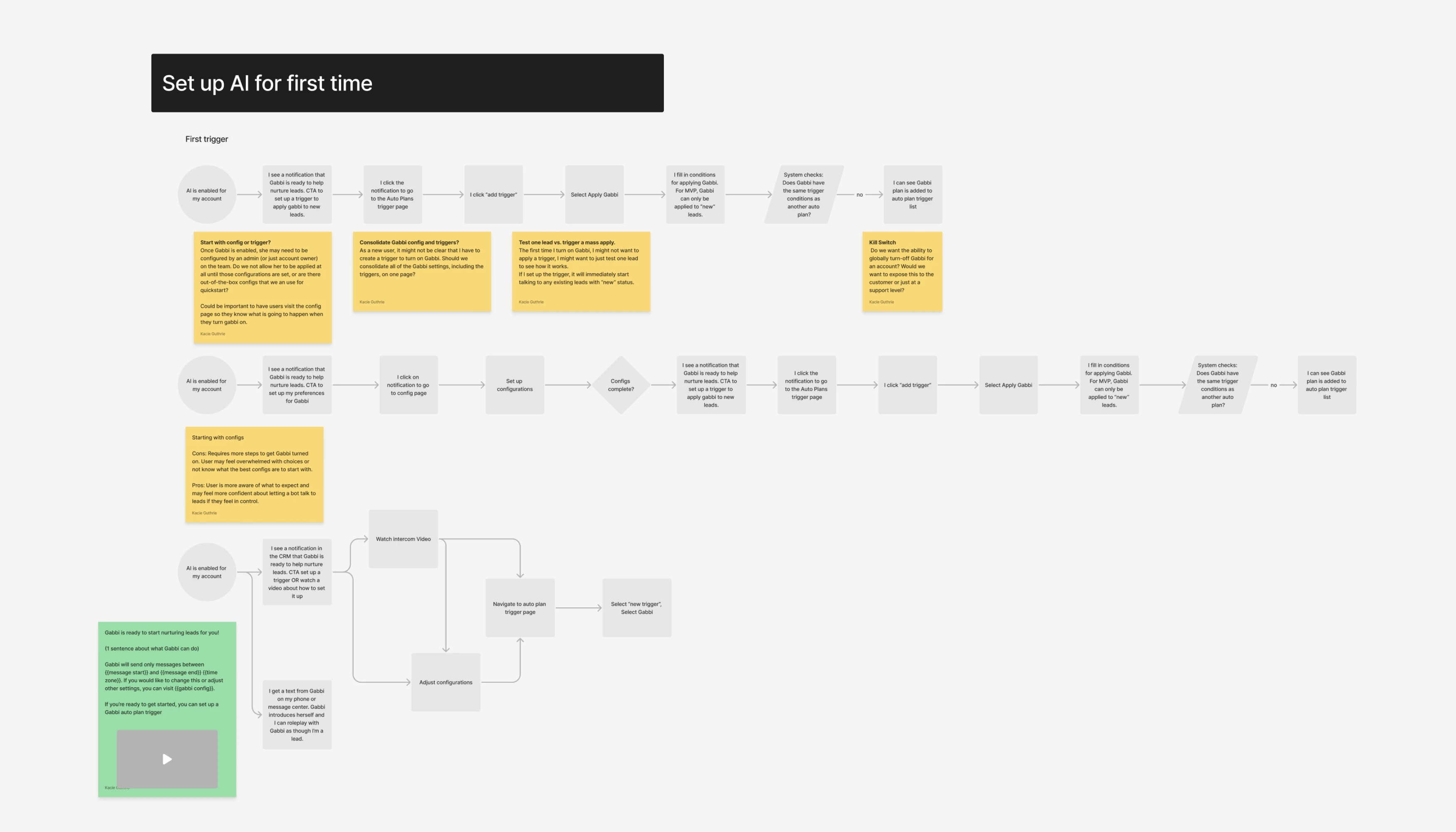

User Flows

Further Research

Design Exploration

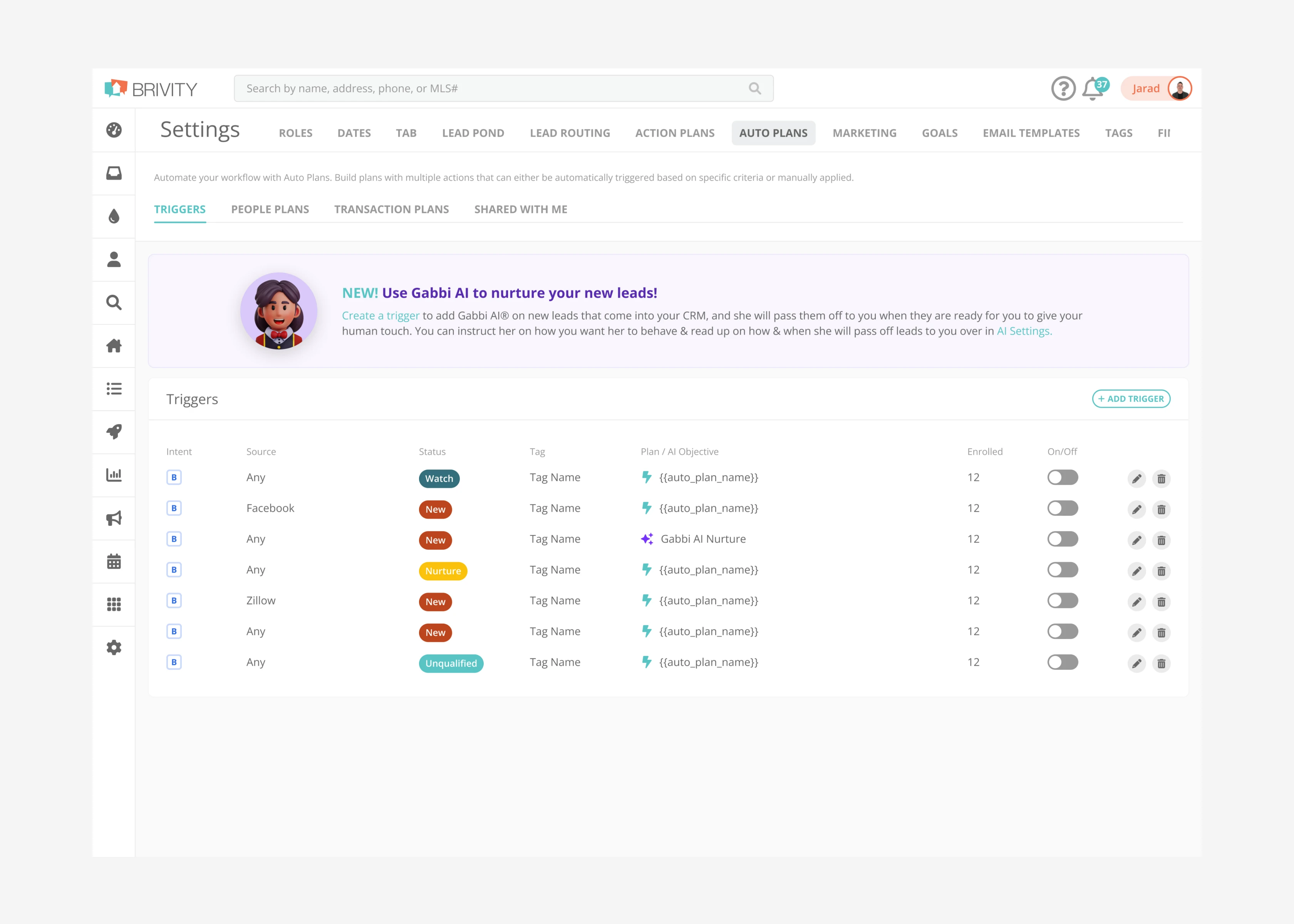

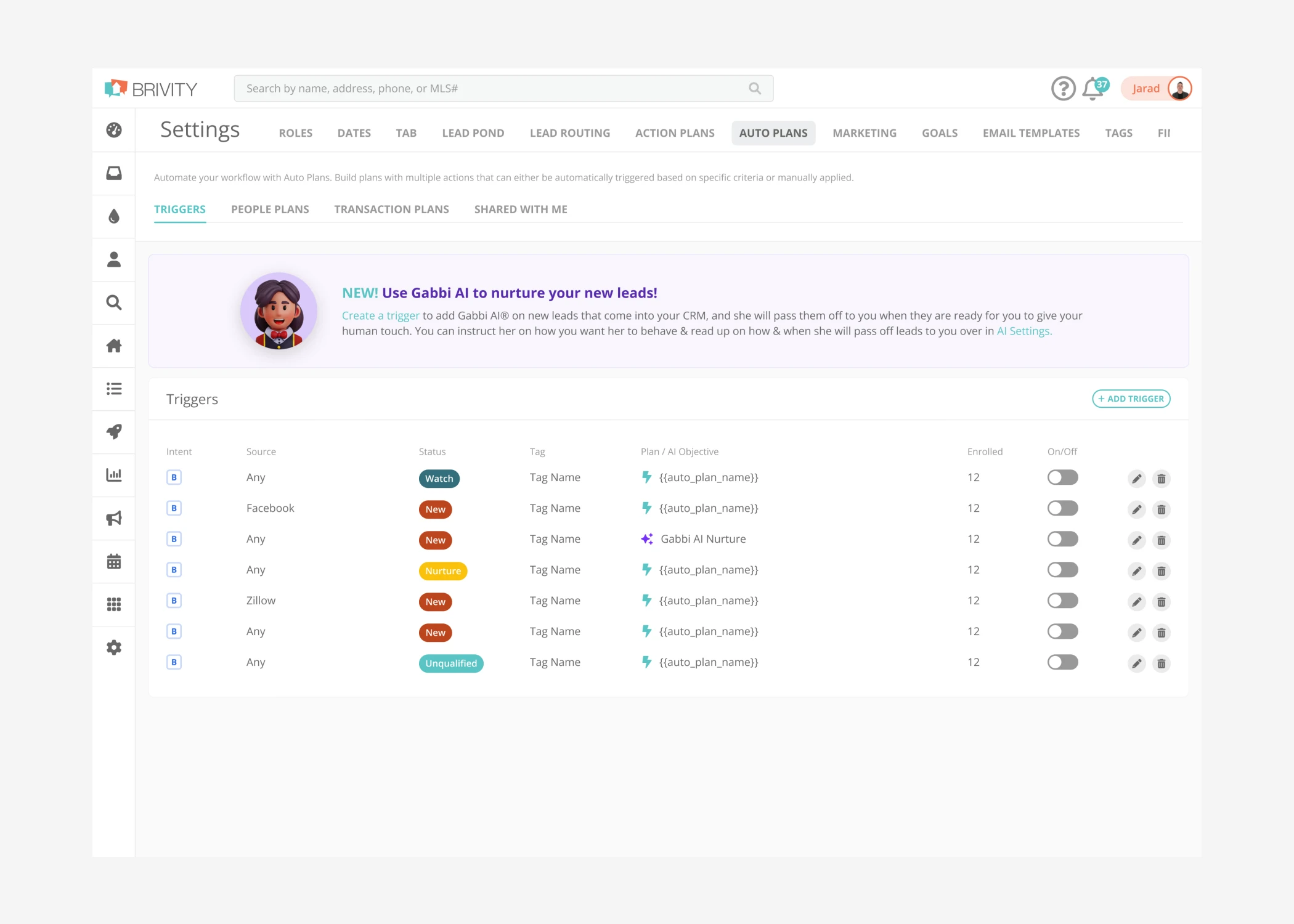

The PRD, largely flexible in the beginning, gave us enough direction to begin visually exploring how AI could be integrated into our CRM. The goal was to gain further buy-in from stakeholders on the scope of these releases and to confirm we were headed in the right direction. Early on, we were specifically asked to include blue-sky (far-out) explorations.

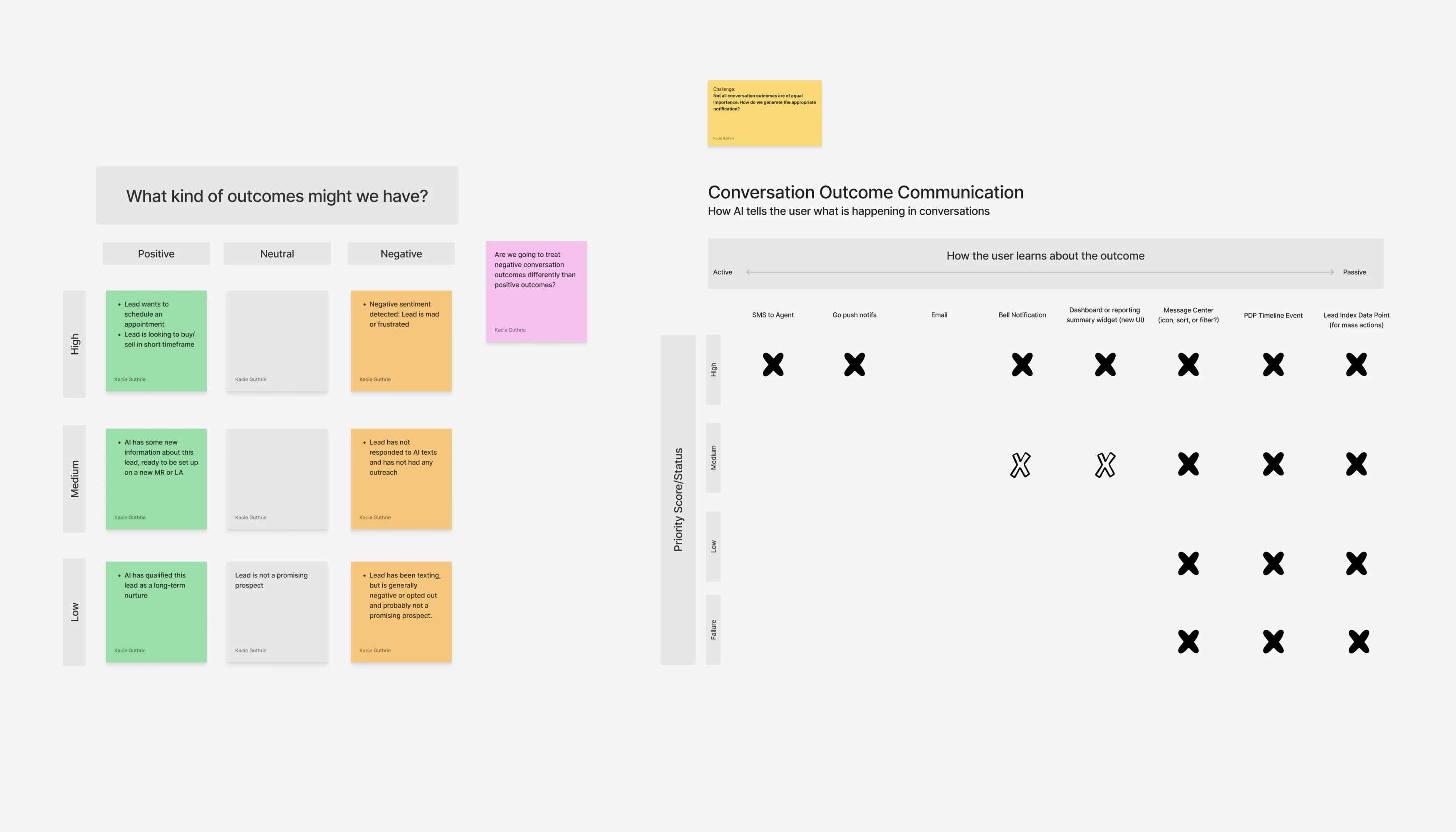

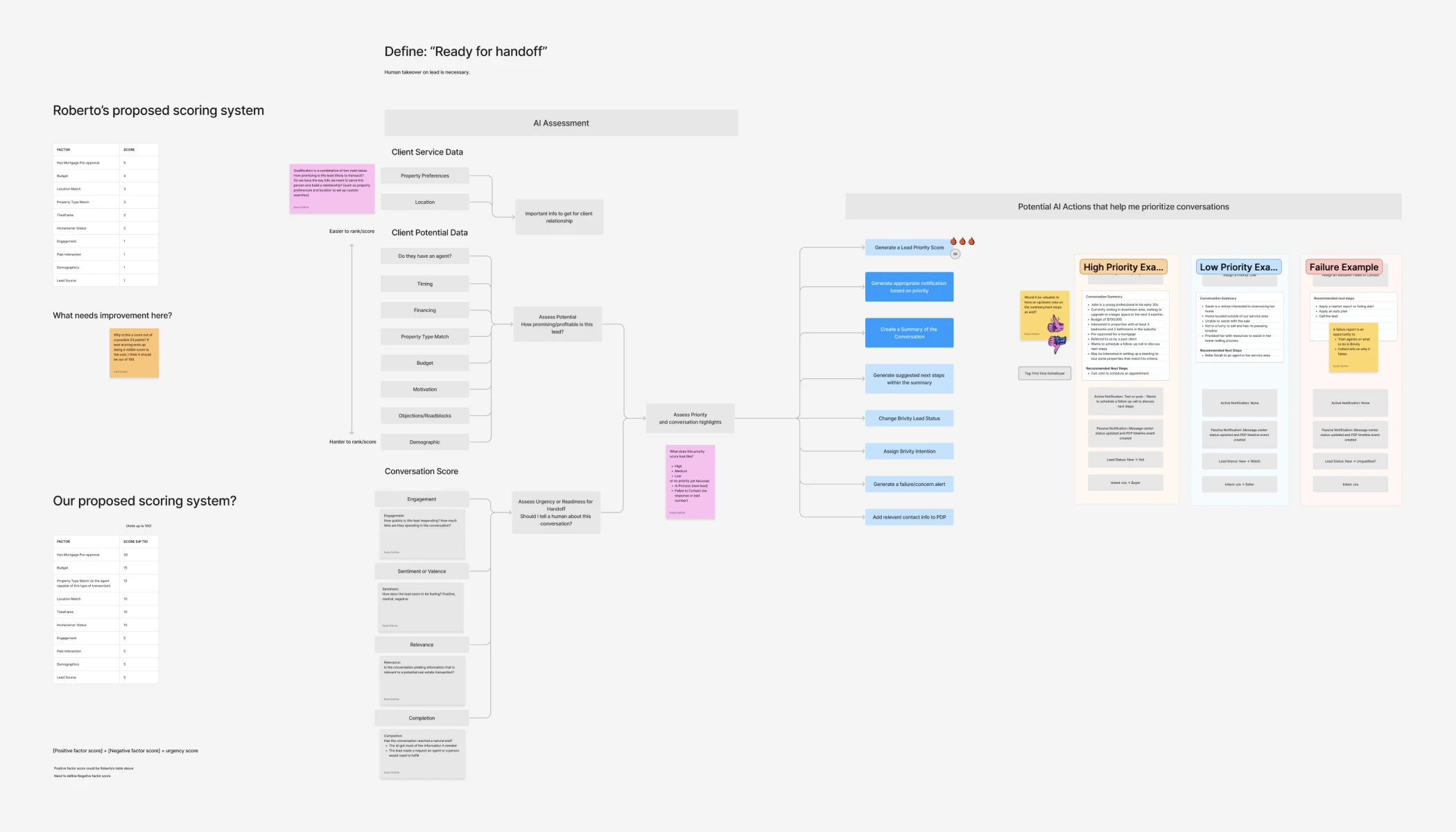

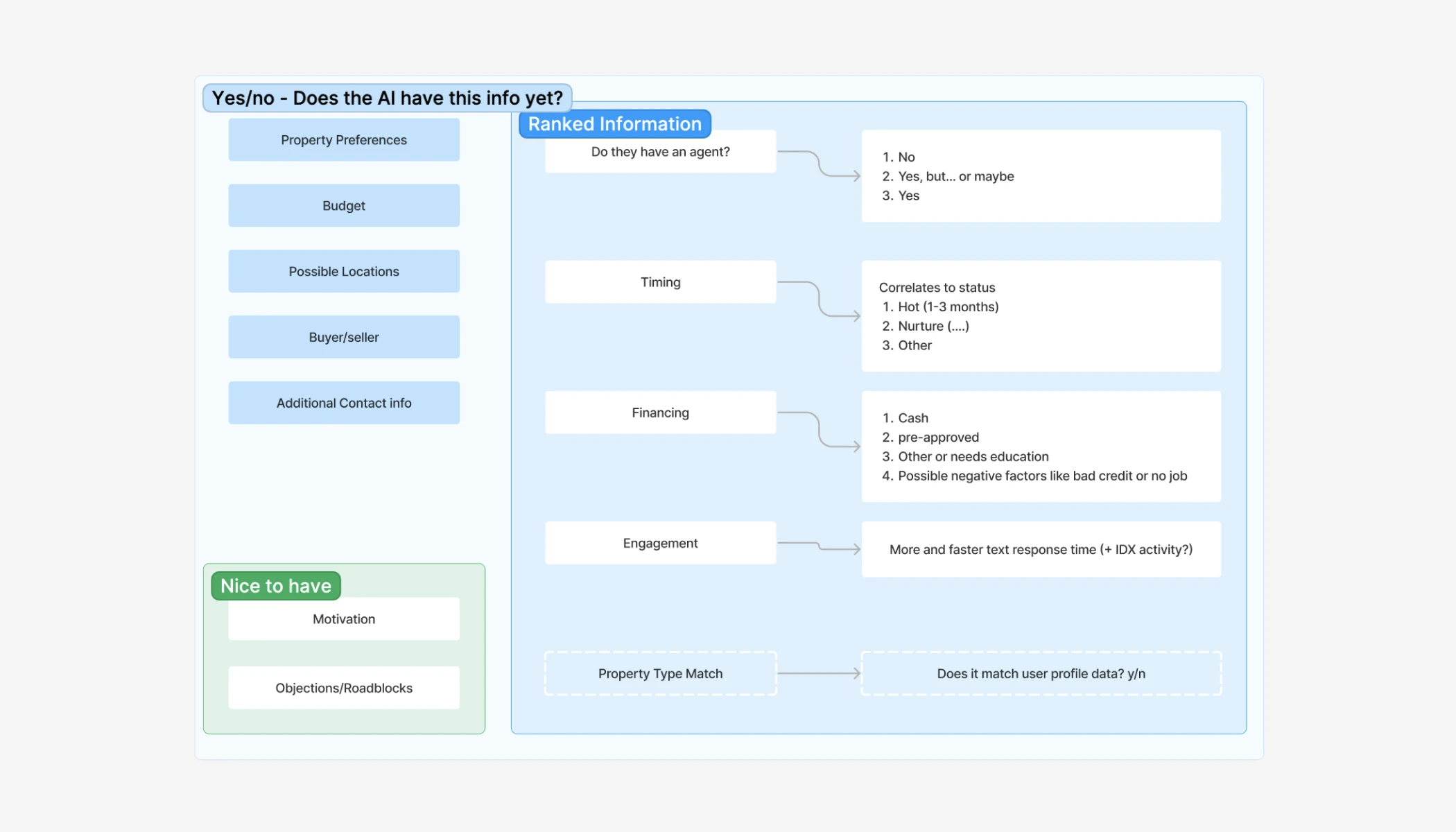

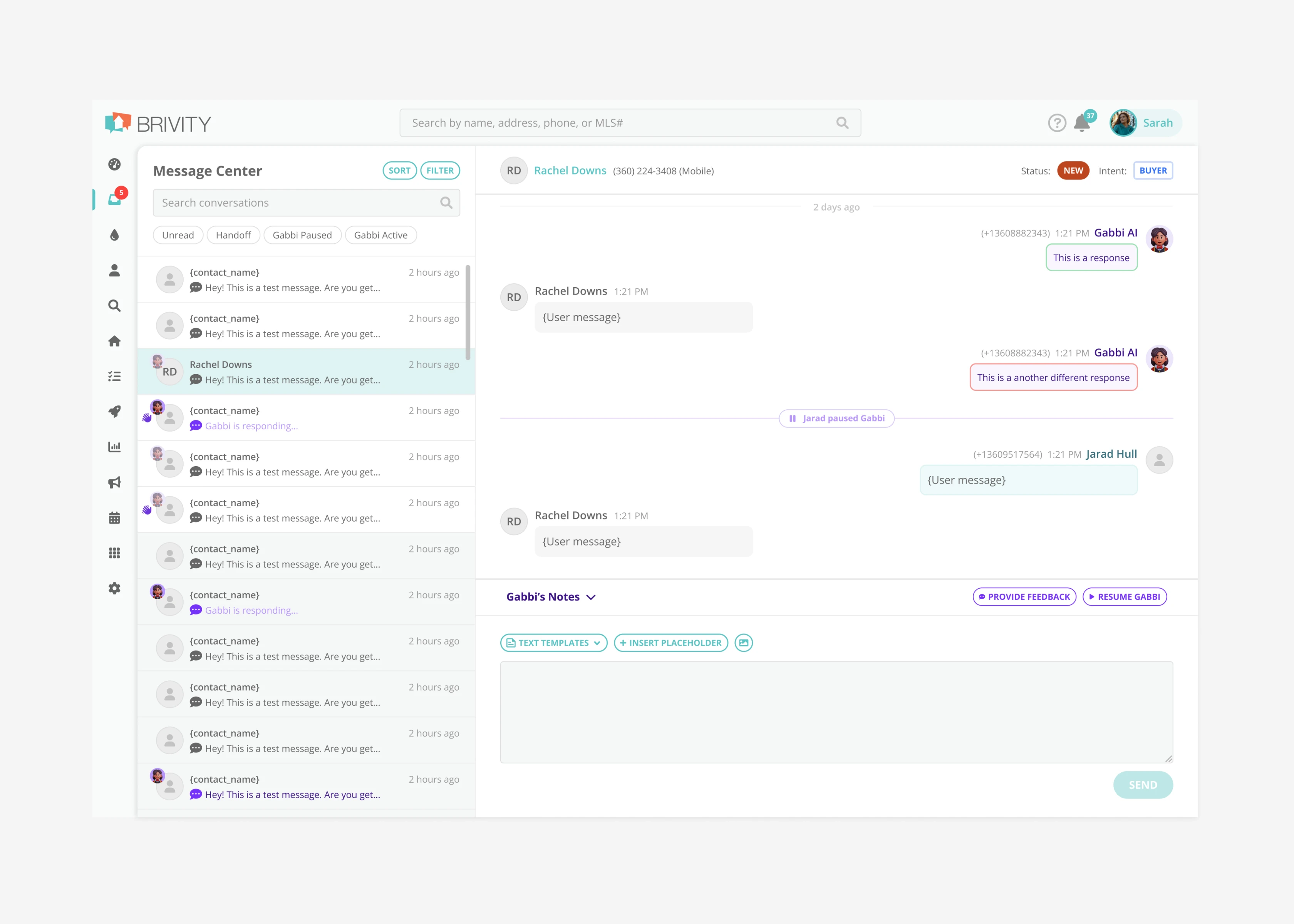

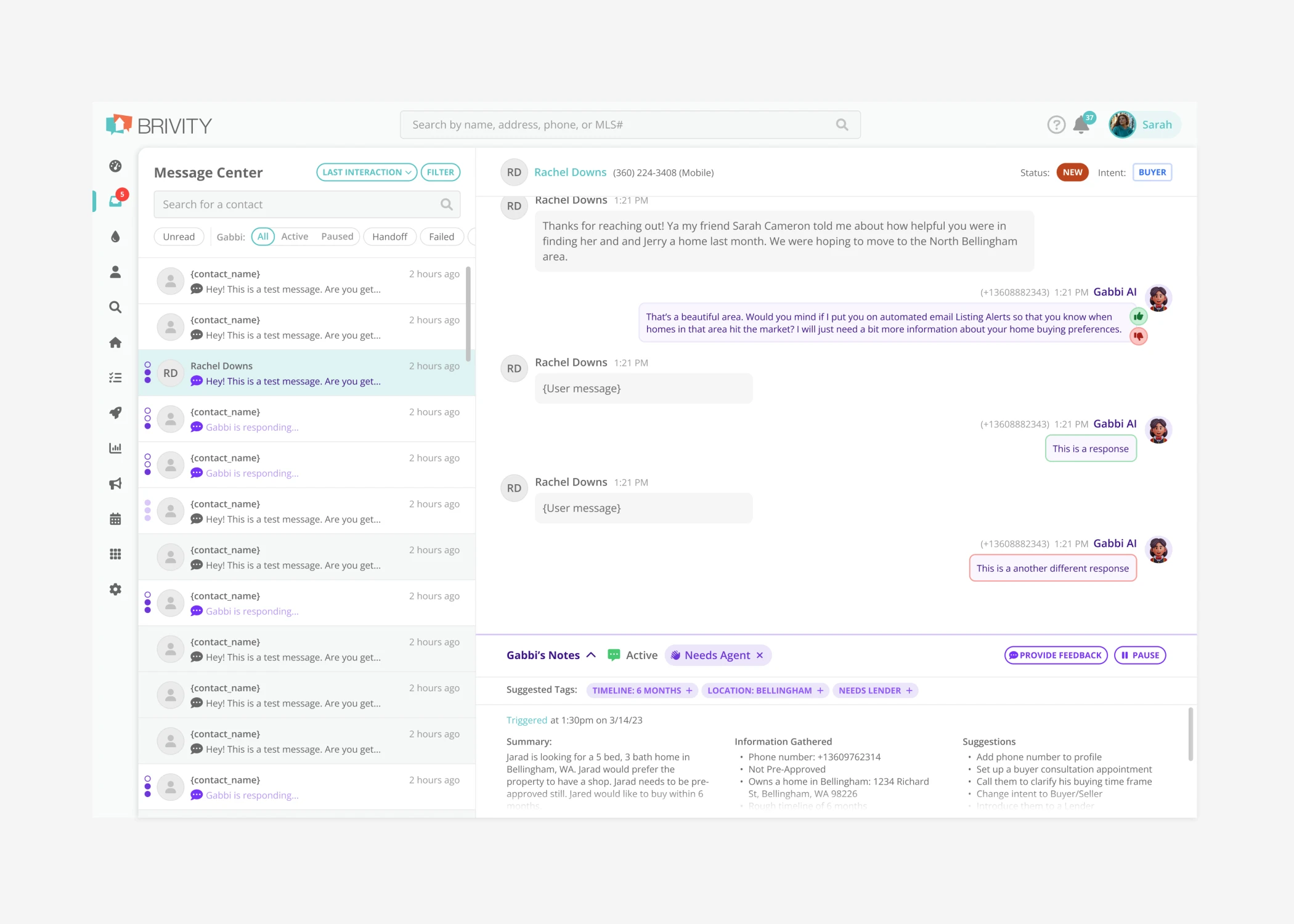

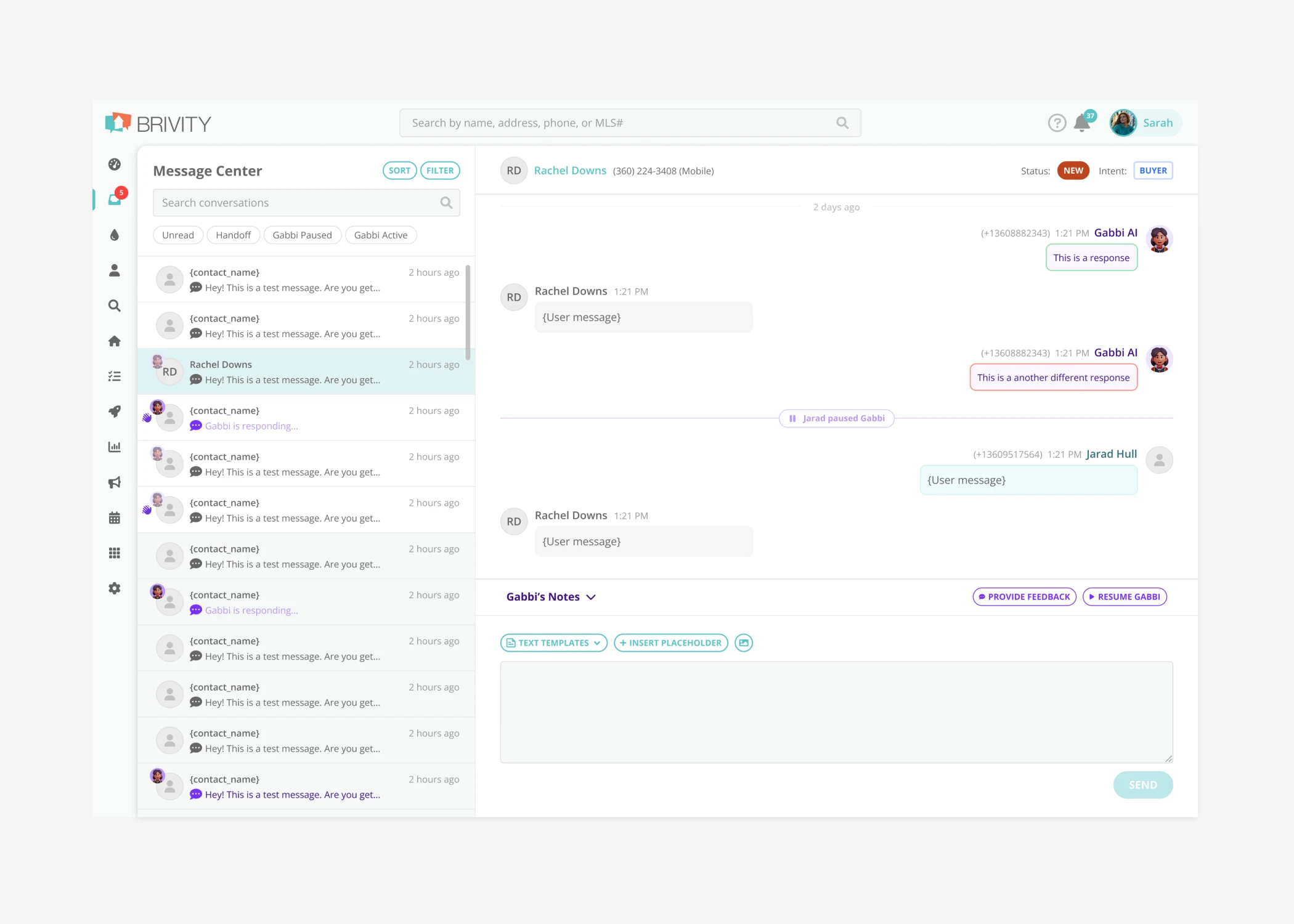

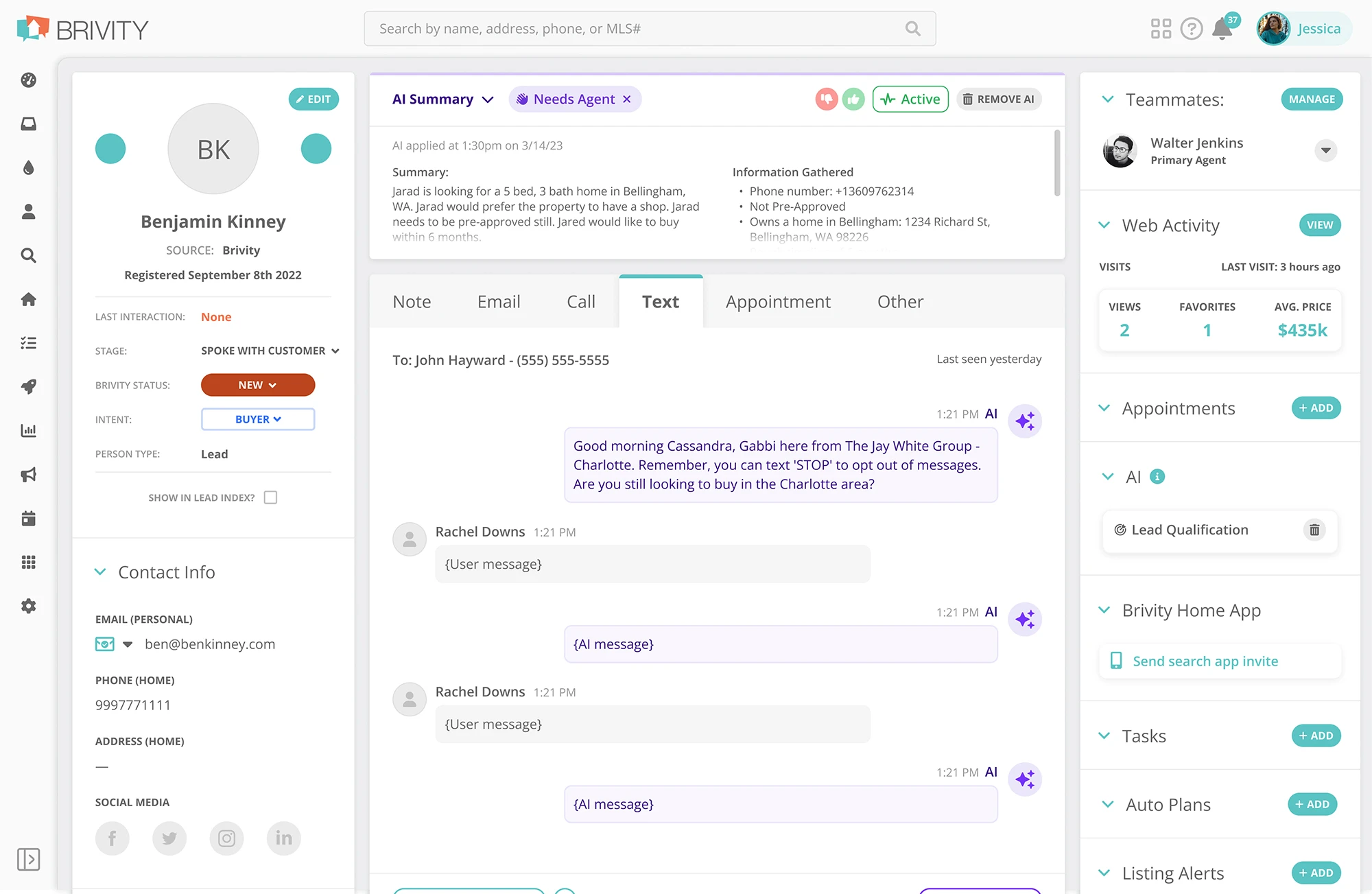

Our early releases tackled the Message Center, an area of our product that was already the primary place for initial contact with new leads. We started with the ability to filter for AI-applied conversations and an indicator of whether the AI on a lead was active or paused, as well as conversation summaries and AI Flags, which are the AI's way of telling the user what conclusion it has come to.

Beta Release

(The last release I worked on)

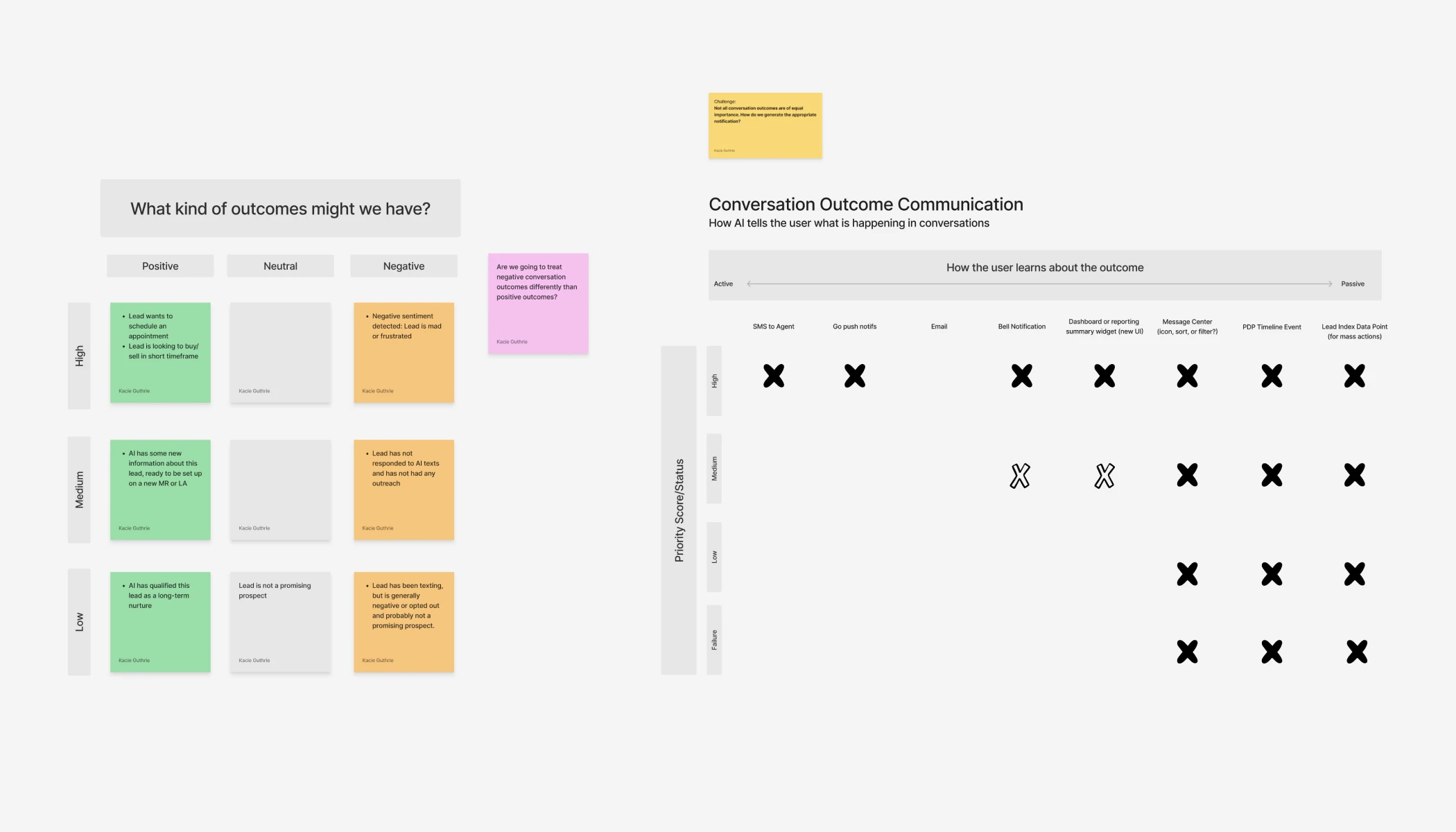

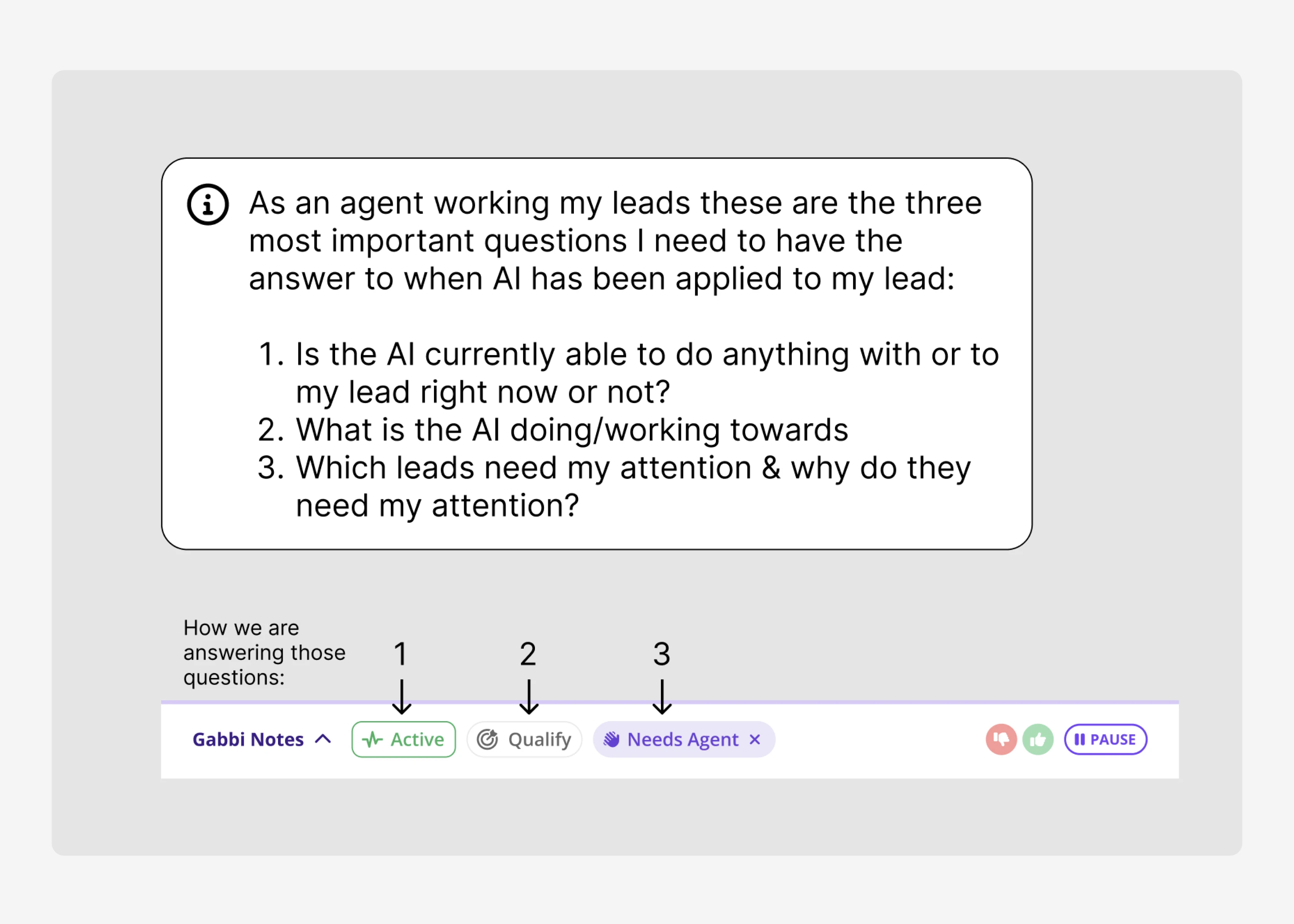

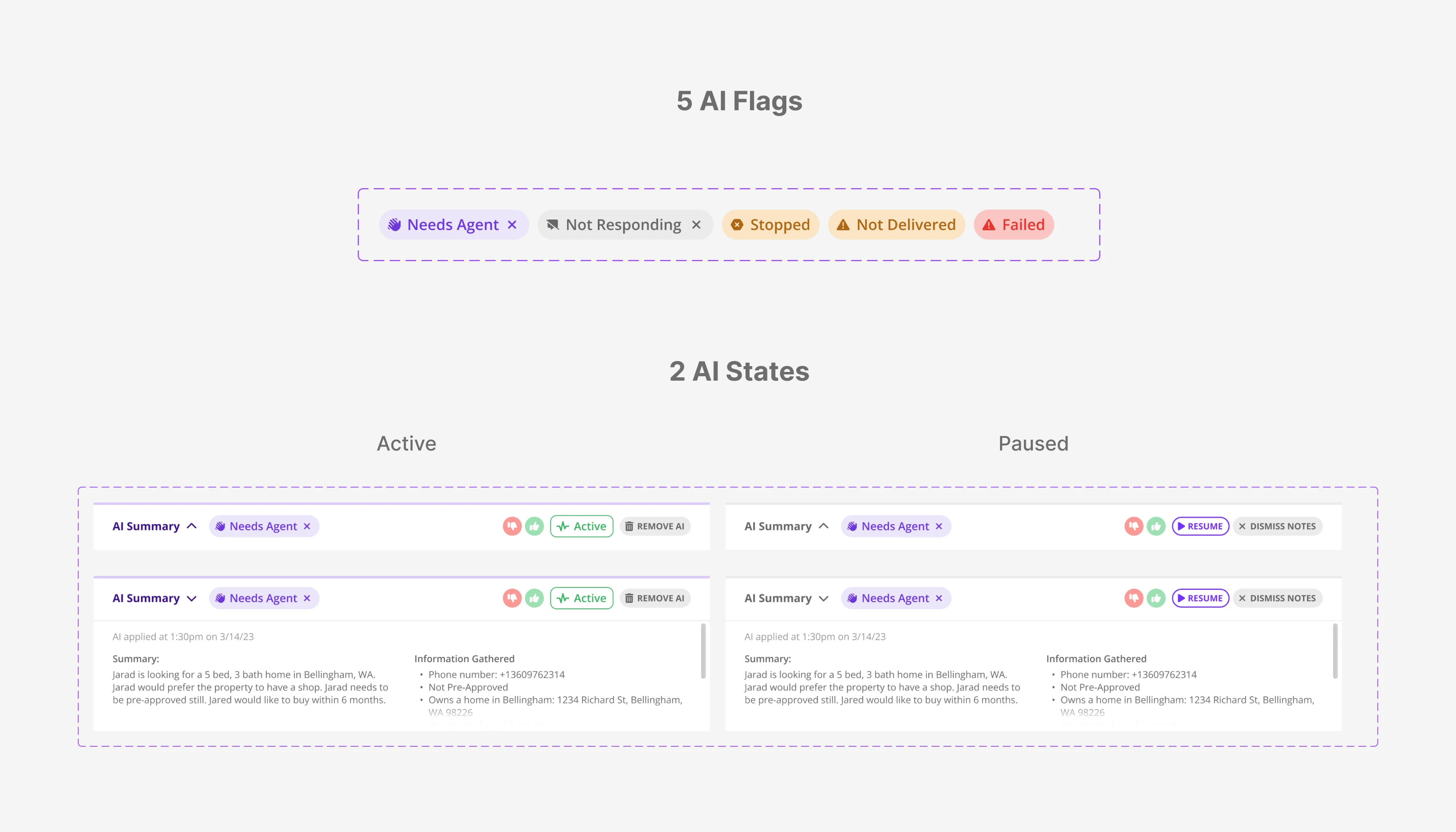

We landed on a solution that covered all of the necessary states and outcomes an AI could be in or run into. That solution was two AI states (active & paused) and five AI flags (Needs Agent, Not Responding, Stopped, Not Delivered and Failed).
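A minimal sketch of how that model could be represented in code, written in Python purely for illustration. The class and field names are hypothetical, and the assumption that a lead carries one state plus at most one flag at a time is mine for the sake of the example, not something the releases confirm.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class AIState(Enum):
    """The two AI states from the case study."""
    ACTIVE = "Active"
    PAUSED = "Paused"


class AIFlag(Enum):
    """The five AI flags from the case study."""
    NEEDS_AGENT = "Needs Agent"
    NOT_RESPONDING = "Not Responding"
    STOPPED = "Stopped"
    NOT_DELIVERED = "Not Delivered"
    FAILED = "Failed"


@dataclass
class LeadAIStatus:
    """Hypothetical per-lead record: one state and an optional flag."""
    state: AIState
    flag: Optional[AIFlag] = None  # assumption: at most one flag at a time

    def label(self) -> str:
        # Text a column or badge might display for this lead.
        if self.flag is None:
            return self.state.value
        return f"{self.state.value} - {self.flag.value}"
```

For example, `LeadAIStatus(AIState.PAUSED, AIFlag.NEEDS_AGENT).label()` yields the text a badge could show for a lead whose AI has been paused and handed back to the agent.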

We planned on one alpha, one beta, and finally an MVP release. However, the one alpha release quickly became three separate releases to our testers: devs were limited on sprint capacity and busy with other business objectives, so we could fit only a select number of requirements into each sprint.

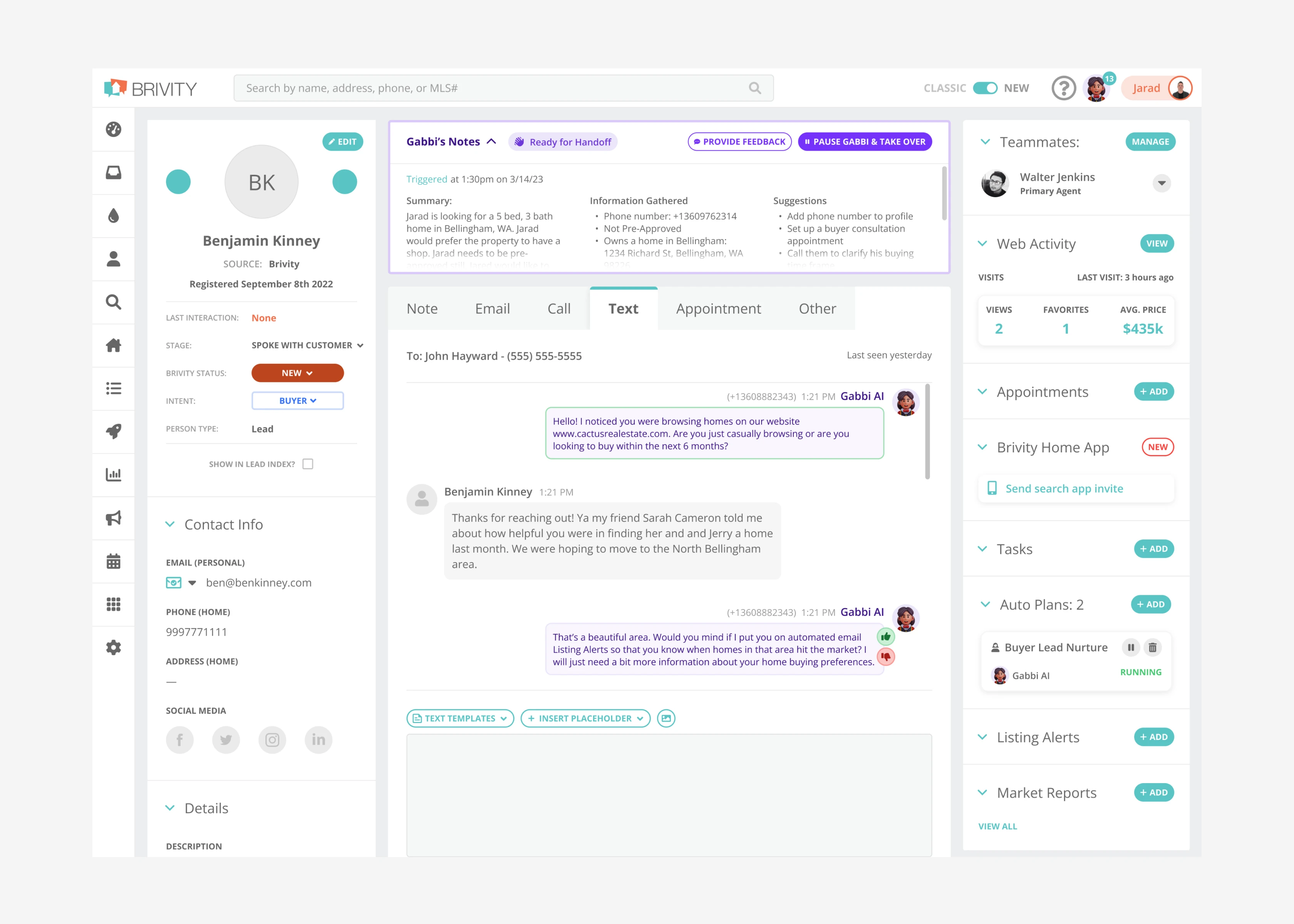

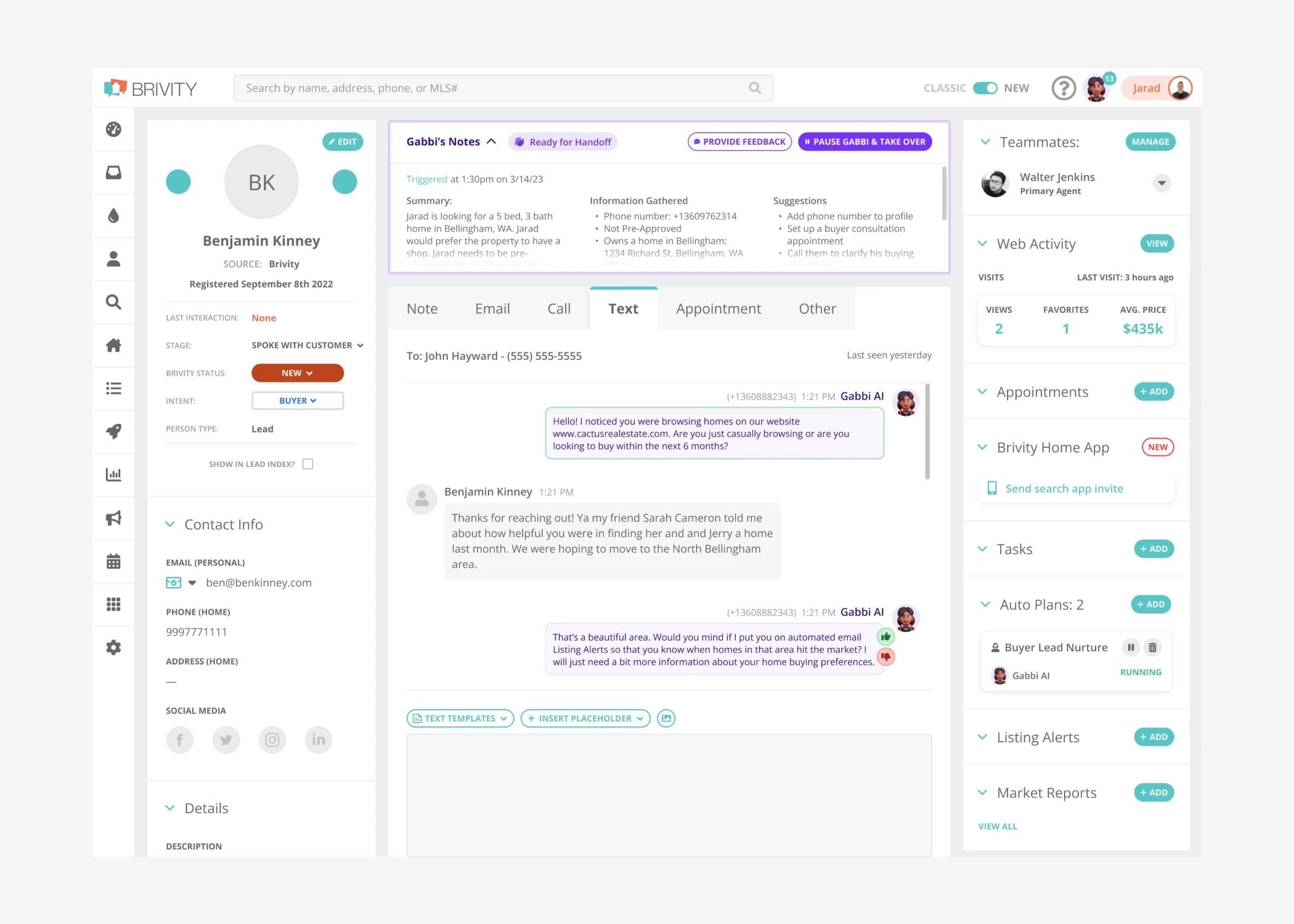

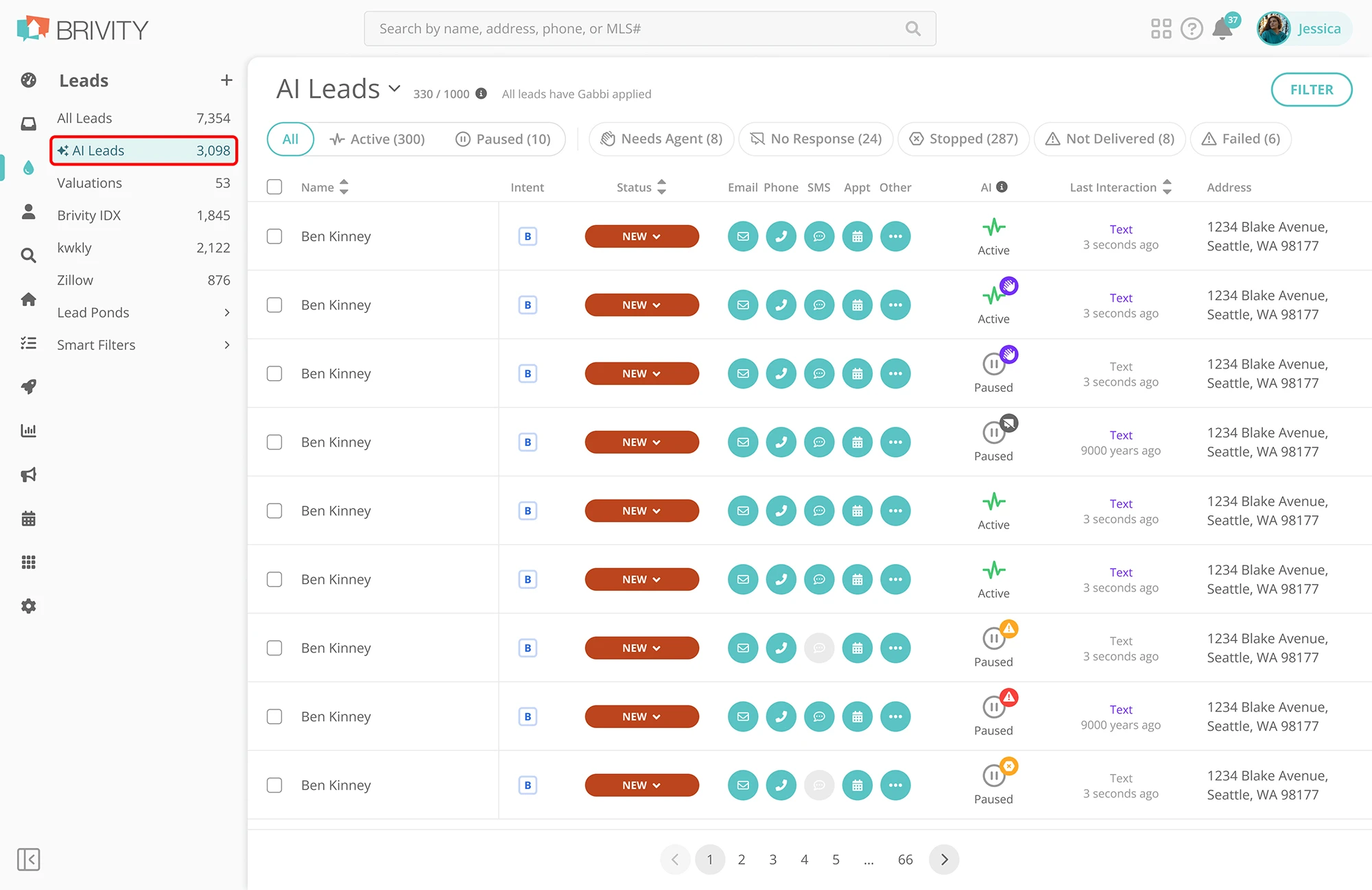

As we began to tackle UI work for the specific releases, we refined the big-picture concepts for the most heavily used screens, which we would need for the initial implementation: our Message Center, Contact Detail Page, and Contact Index. I led the final crafting of the UI for all releases.

The Message Center features a new AI tab for filtering the Message Center for AI-applied conversations, and AI Flags on conversation threads so the user could visually differentiate those conversations. We added an AI Summary block on AI-applied leads so the user could catch up on what info the AI has captured toward its lead qualification objective, as well as give us feedback on how the AI was performing for them. We also visually differentiated AI-sent text messages so the user could look back in a thread and clearly see what the AI sent versus what they sent themselves.

The Leads Index features a new lead group category: AI Leads. In this view of the lead index, a user sees various AI filtering options at the top of the screen (the text toggle component + filter pills). We also added an AI column, so the user can see at a glance the AI's state and whether the AI has raised a flag on any AI-applied lead.

The Contact Detail Page features the same AI Summary Block as the Message Center, deliberately placed at the top of the page, which intentionally changed the position of that lead's interactions. We want the user to catch up on what the AI has gathered, and stop or pause it, before interacting. Additionally, an AI block was added to the right of the page, which sets us up for the future when we add AI objectives other than lead qualification. At a stakeholder's request, the empty state for that AI block on the right has a large "Add AI" button spanning the width of the column, to increase feature adoption.

Gathering Insights

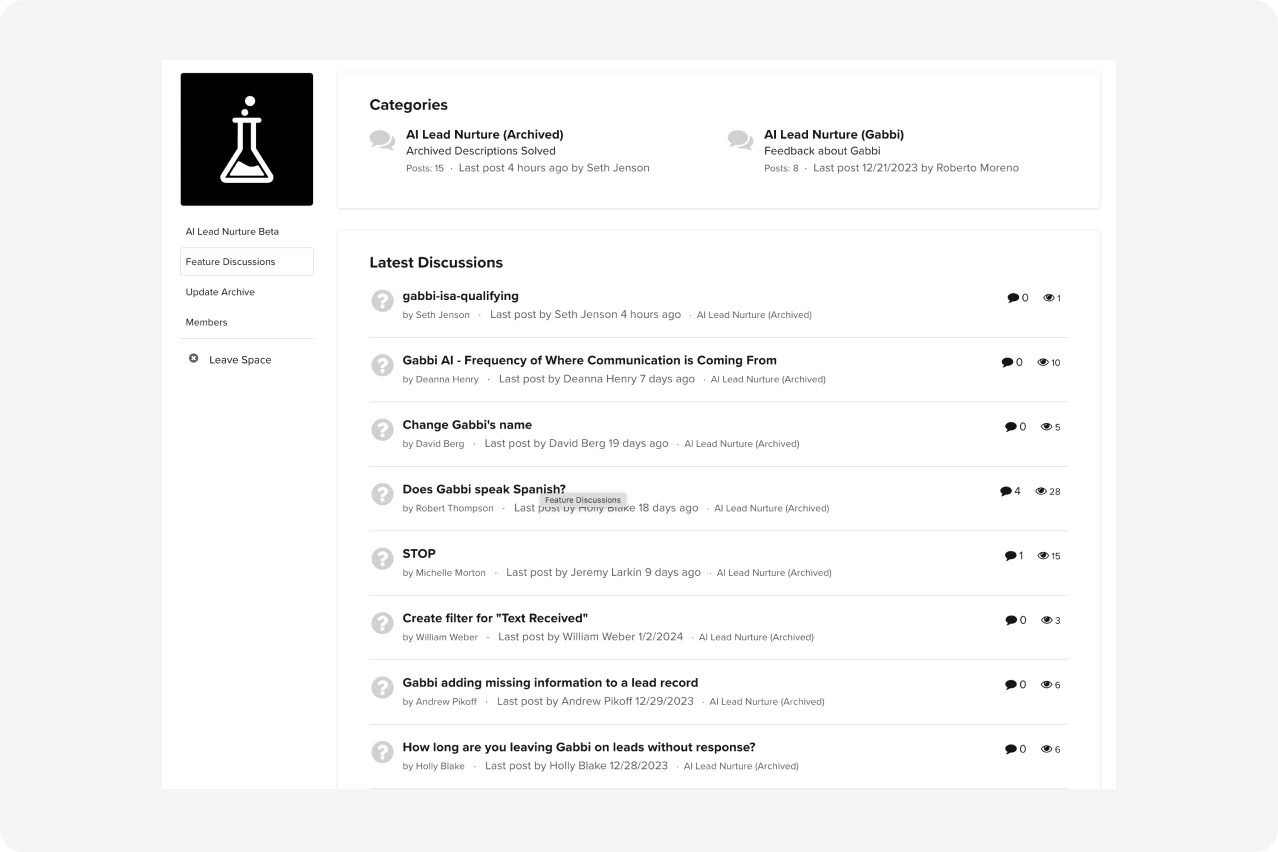

Early on, we knew that we wanted to test our first alpha and beta versions with a select group of users and gain insights well before we released this feature to our entire user base. The AI was going to bring a level of volatility and unpredictability into the lead qualification process, so from the very beginning we defined a primary goal of building trust with our users to increase feature adoption. We crafted a forum where alpha and beta testers could submit feedback and provide examples of unwanted AI behavior, so that our devs could further refine the prompt we were using or set up new guardrails.

FAQs

Discussion Board

Feedback Center